New Learning MOOC’s Updates

Generative AI Trust and Validation in Education and Academic Research: Critical Analysis

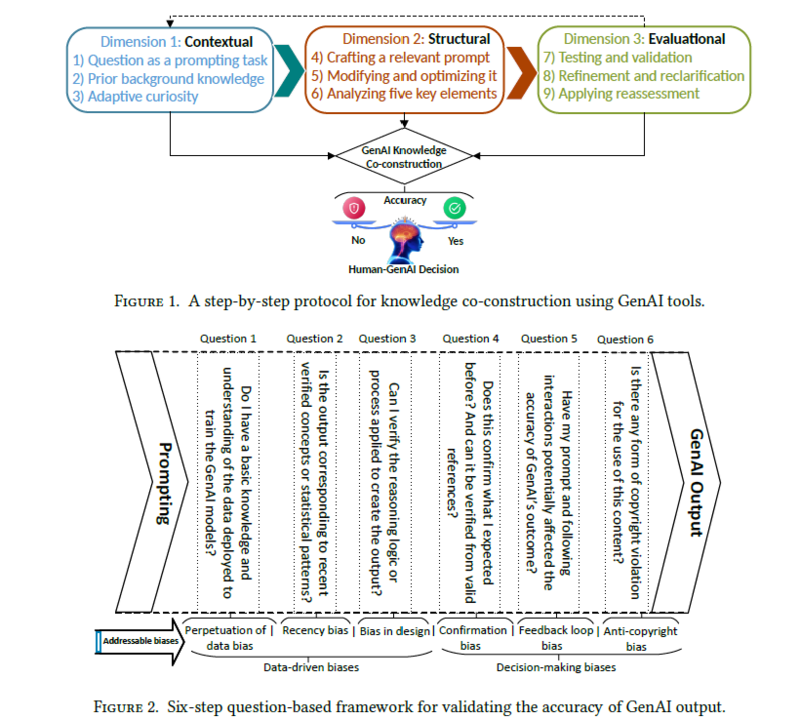

As an Assistant Professor specializing in Generative Artificial Intelligence (GenAI) applications for cybersecurity, I have observed a concerning trend: many students place excessive trust in GenAI-generated content, often incorporating it directly into their academic projects and research theses without proper validation. While GenAI tools (e.g., ChatGPT-4o) undoubtedly offer valuable support for learning and data collection, they also present significant risks. These tools can inadvertently amplify decision-making biases and contribute to data-driven misconceptions, potentially leading to what I term "hallucinated GenAI-human knowledge co-construction". To address this challenge, I believe it is imperative to devise and implement comprehensive digital media literacy and cyber-wellness education programs (e.g., a prompt engineering protocol) that train netizens of all backgrounds in cyberdefense mechanisms to reduce GenAI-associated risks such as cyberscamming, disinformation, and misinformation.

When educators or students, as netizens, use GenAI tools to create content (e.g., preparing assignments), they may enter a hallucinated GenAI-human knowledge co-construction process, leading to misplaced trust (or distrust) rooted in misinformation. This concept goes beyond the typical concern about GenAI hallucination: it describes a collaborative failure mode in which the human and the GenAI system reinforce each other's biases and errors. The result is a dangerous feedback loop in which:

- Students trust GenAI outputs without validation

- GenAI tools amplify existing human cognitive biases

- The combined system produces "hallucinated" knowledge that appears authoritative

- This false knowledge gets incorporated into academic work, creating a cycle of misinformation

To address the aforementioned risks, the use of GenAI tools in education and academic research requires deeper exploration in cyber (dis)trust contexts where the stakes of misinformation are particularly high.

Several public policy actions have already been taken to reduce such risks in society. The EU AI Act, for example, is a landmark contemporary policy that directly addresses the concerns raised above. The regulation establishes a risk-based framework for AI systems and includes specific provisions for educational applications.

The EU AI Act's Key Provisions Relevant to Cybersecurity Education:

1. Transparency Requirements: AI-generated content must be disclosed as such, addressing the validation challenges described above.

2. Human Oversight Mandates: High-risk AI applications require human supervision, directly supporting the need for critical evaluation skills.

3. Accuracy and Reliability Standards: High-risk AI systems must achieve an appropriate level of accuracy and robustness, addressing the verification concerns raised above.

From an educational perspective, the EU AI Act has the following regulatory strengths and gaps.

Strengths:

1. Comprehensive risk-based approach

2. Clear accountability mechanisms

3. Emphasis on human-centric AI development

Gaps for Education:

1. Limited specific guidance for academic applications

2. Insufficient focus on digital literacy development

3. Missing provisions for student training in AI validation

To close these gaps, I believe the EU AI Act should be expanded to include the following:

- Mandatory AI media literacy (or cyber-wellness education) components in cybersecurity curricula

- Standardized validation protocols (e.g., interactive prompting protocol) for academic GenAI use

- Funding for digital media literacy (or cyber-wellness educational) programs

Conclusion:

The intersection of cyber (dis)trust issues and contemporary AI policy reveals both opportunities and challenges for cybersecurity education in the GenAI era. The EU AI Act provides a regulatory foundation, but educational institutions must still develop specific implementation strategies that address the unique risks of "hallucinated GenAI-human knowledge co-construction" in academic contexts.

The key insight is that we need both regulatory frameworks (e.g., the EU AI Act) and educational interventions (such as the digital literacy programs proposed in Ahvanooey et al. 2025) to effectively address cyber (dis)trust issues in cybersecurity education in the GenAI era.

References:

- Gstrein, O.J., Haleem, N. and Zwitter, A., 2024. General-purpose AI regulation and the European Union AI Act. Internet Policy Review, 13(3), pp.1-26.

- Symeou, L., Louca, L., Kavadella, A., Mackay, J., Danidou, Y. and Raffay, V., 2025. Development of Evidence‐Based Guidelines for the Integration of Generative AI in University Education Through a Multidisciplinary, Consensus‐Based Approach. European Journal of Dental Education.

- Ahvanooey, M.T., Mazurczyk, W. and Lee, D., 2025. Socio-Economic Threats of Deepfakes and the Role of Cyber-Wellness Education in Defense. Communications of the ACM, 68(9), pp.1-10.

- Naghdy, F., 2025. Collaboration with GenAI in Engineering Research Design. Data & Knowledge Engineering, p.102445.

Good afternoon, and warm regards. I partly share your point of view, but in my opinion learning should be blended, combining in-person and virtual formats, so that both approaches are available and students can socialize and integrate better.